Scalable Twitter Self-censorship Using the rtweet Package

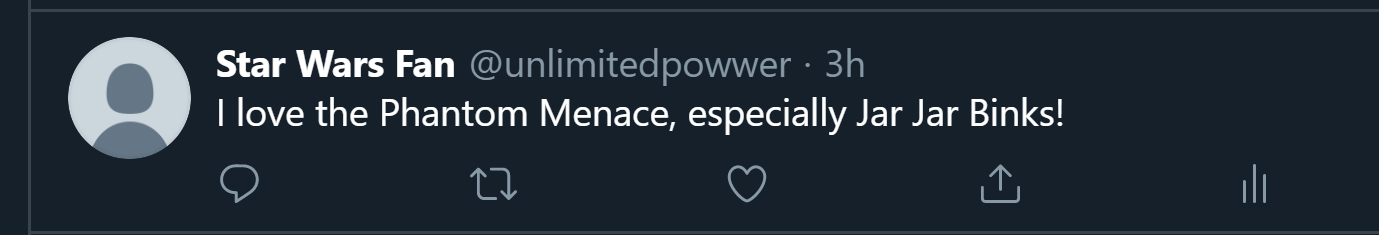

Twitter should be a place where you are free to post anything. But sometimes with this much freedom, you could easily go too far and post something controversial, like this one:

You might quickly proceed to forget that you’ve ever posted the stupid tweet, but there’s no guarantee that no one will ever find it in the future, and before you know it you are #canceled.

Is there a way to prevent this? In this post I will demonstrate a way to programmatically find and delete your tweets based on defined keywords, to ensure your regrettable bad takes are forever erased from the internet. To do this we will be using the rtweet package in R, which is a wrapper around Twitter’s API.

To begin, we first load the packages we will be using.

library(rtweet) # twitter api

library(dplyr) # handling data

library(purrr) # functional programming

We will need to obtain an access token from Twitter. If you call any rtweet function without having obtained one first, the package will conveniently open a web page that lets you obtain a token for the Twitter account you’re currently logged in to. To be explicit here, we will call get_token(), which prompts rtweet to obtain a token by opening a web page where you need to manually authorize the app.

get_token()

If successful, you should see output like this:

<Token>

<oauth_endpoint>

request: https://api.twitter.com/oauth/request_token

authorize: https://api.twitter.com/oauth/authenticate

access: https://api.twitter.com/oauth/access_token

<oauth_app> rstats2twitter

key: <your_key>

secret: <hidden>

<credentials> oauth_token, oauth_token_secret, user_id, screen_name

Now we can start requesting data from Twitter. We will call get_timeline() to get the most recent tweets posted by a user, identified by their Twitter handle. rtweet:::home_user() returns the handle of the user that our access token is associated with. Note that n, the maximum number of tweets to return, cannot exceed 3200. The call returns a dataframe where each row is a tweet, and the text column contains the text of the tweet, as expected.

After obtaining the tweet data, we can filter the tweets based on some keywords. My keyword here is jar since I want to get rid of any tweets involving Jar Jar Binks. But since we’re using grepl() to match strings, you could write any regex pattern to filter your tweets.

bad_stuff <- get_timeline(user=rtweet:::home_user(), n=1000) %>%

filter(grepl('jar', text, ignore.case=TRUE)) # filter based on keyword match

bad_stuff %>%

select(text)

We can inspect which tweets contain the keyword.

# A tibble: 2 x 1

text

<chr>

1 Just bought a jar of peanut butter, can't wait to make myself a PBJ!

2 I love the Phantom Menace, especially Jar Jar Binks!

Oops, turns out another tweet also matched the keyword jar even though it’s unrelated to Jar Jar Binks! This makes the task a little trickier, as we can’t just delete every tweet the filter returns. We could refine our keyword, but the approach I take here is to manually add a boolean flag indicating whether we actually want to delete a matched tweet. The workflow can be flexible: you can even export the dataframe to a csv file, go over it and mark each tweet, and then load it back into R.
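For comparison, here is a minimal sketch of what refining the keyword could look like. It runs on a small mock data frame rather than on real API output: the ids and the third tweet are made up for illustration, and the stricter pattern is just one possible choice, not the only way to do it.

```r
# Mock tweets standing in for the text column returned by get_timeline().
# The ids and the third tweet are invented for this example.
tweets <- data.frame(
  status_id = c("1", "2", "3"),
  text = c(
    "Just bought a jar of peanut butter, can't wait to make myself a PBJ!",
    "I love the Phantom Menace, especially Jar Jar Binks!",
    "Jarring news today."
  ),
  stringsAsFactors = FALSE
)

# Loose pattern: matches "jar" anywhere, even inside other words
loose <- grepl("jar", tweets$text, ignore.case = TRUE)

# Stricter pattern: the whole words "jar jar" separated by whitespace
strict <- grepl("\\bjar\\s+jar\\b", tweets$text, ignore.case = TRUE, perl = TRUE)

# Only the Jar Jar Binks tweet survives the stricter filter
tweets$text[strict]
```

A tighter pattern cuts down on false positives, but it can also miss variants you did not think of, which is why I still prefer eyeballing the matches.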

## human in the loop intervention

bad_stuff <- bad_stuff %>%

mutate(purge=TRUE) # initialize boolean flag

bad_stuff <- bad_stuff %>%

mutate(purge=ifelse(status_id=='<id of tweet to exclude>', FALSE, purge))

bad_stuff %>%

select(status_id, purge, text)

Now we have marked the tweet we don’t want deleted.

# A tibble: 2 x 3

status_id purge text

<chr> <lgl> <chr>

1 12230461081649029~ FALSE Just bought a jar of peanut butter, can't wait to make~

2 12230454003131269~ TRUE I love the Phantom Menace, especially Jar Jar Binks!
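The csv round trip mentioned earlier could look roughly like the sketch below. It uses base R and a temporary file, the ids are made up, and the manual edit is simulated in code so the example runs on its own. One assumption worth calling out: I read status_id back as character, because ids this long do not fit exactly in a double and would otherwise be silently rounded.

```r
# Mock version of bad_stuff with made-up ids, for illustration only
review <- data.frame(
  status_id = c("1000000000000000001", "1000000000000000002"),
  purge = c(TRUE, TRUE),
  text = c(
    "Just bought a jar of peanut butter, can't wait to make myself a PBJ!",
    "I love the Phantom Menace, especially Jar Jar Binks!"
  ),
  stringsAsFactors = FALSE
)

# Export the candidates for review
csv_path <- tempfile(fileext = ".csv")
write.csv(review, csv_path, row.names = FALSE)

# Normally you would now edit the purge column by hand in a spreadsheet.
# Here the edit is simulated: keep the peanut butter tweet.
edited <- read.csv(csv_path, colClasses = c(status_id = "character"),
                   stringsAsFactors = FALSE)
edited$purge[1] <- FALSE
write.csv(edited, csv_path, row.names = FALSE)

# Load the reviewed file back, keeping ids as character and purge as logical
reviewed <- read.csv(
  csv_path,
  colClasses = c(status_id = "character", purge = "logical"),
  stringsAsFactors = FALSE
)

# Ids of the tweets still marked for deletion
ids_to_delete <- reviewed$status_id[reviewed$purge]
```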

The id of any tweet we do want to delete can now be stored.

to_purge <- bad_stuff %>%

filter(purge) %>%

pull(status_id)

The final step is to call the Twitter API to delete each unwanted tweet. This is accomplished by calling post_tweet() and supplying destroy_id. We could use a good old for loop to iterate over the status_id of each tweet. A more tidyverse-flavored solution is to use the purrr package’s map(), which applies a function to each element of a supplied vector.

## for each status_id, delete the corresponding tweet via API

to_purge %>%

map(~ post_tweet(status='', destroy_id=.x))

If the deletion succeeds, you should see this message printed:

your tweet has been deleted!
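The good old for loop mentioned above has one advantage: it is easy to wrap each call in tryCatch() so that one failed deletion does not abort the rest. Below is a minimal sketch of that idea. delete_tweet() is a hypothetical stand-in for post_tweet(status='', destroy_id=id) so that the example runs without touching the Twitter API, and the ids are made up.

```r
# Hypothetical stand-in for post_tweet(status = '', destroy_id = id).
# It fails on one id to simulate an API error.
delete_tweet <- function(id) {
  if (id == "bad-id") stop("tweet not found")
  invisible(TRUE)
}

ids <- c("id-1", "bad-id", "id-2")  # made-up ids

deleted <- character(0)
failed <- character(0)

for (id in ids) {
  # Attempt the deletion; on error, report it and carry on with the next id
  ok <- tryCatch({
    delete_tweet(id)
    TRUE
  }, error = function(e) {
    message("could not delete ", id, ": ", conditionMessage(e))
    FALSE
  })
  if (ok) deleted <- c(deleted, id) else failed <- c(failed, id)
}
```

After the loop, deleted holds the ids that went through and failed holds the ones worth retrying or inspecting by hand.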

Rejoice! You have learned the dark side of the Force. Here is all the code in one block. Note there is no human intervention involved in this one so it could be dangerous.

get_timeline(user=rtweet:::home_user(), n=1000) %>%

filter(grepl('jar', text, ignore.case=TRUE)) %>% # filter based on keyword match

pull(status_id) %>%

map(~ post_tweet(status='', destroy_id=.x))
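If the fully automatic version makes you nervous, one option is to hide it behind a dry-run switch. The sketch below is only an illustration of that idea: purge_matching() and fake_delete() are hypothetical helpers that I made up, and the tweets are mock data, so nothing here talks to Twitter.

```r
# Hypothetical helper: find tweets matching a pattern and either report
# them (dry_run = TRUE, the default) or pass each id to delete_fn.
purge_matching <- function(tweets, pattern, delete_fn, dry_run = TRUE) {
  hits <- tweets[grepl(pattern, tweets$text, ignore.case = TRUE), , drop = FALSE]
  if (dry_run) {
    message(nrow(hits), " tweet(s) would be deleted")
    return(hits$status_id)
  }
  for (id in hits$status_id) delete_fn(id)
  invisible(hits$status_id)
}

# Mock tweets with made-up ids
tweets <- data.frame(
  status_id = c("1", "2"),
  text = c(
    "Just bought a jar of peanut butter, can't wait to make myself a PBJ!",
    "I love the Phantom Menace, especially Jar Jar Binks!"
  ),
  stringsAsFactors = FALSE
)

# Stand-in delete function that only records which ids it was asked to delete
deleted_log <- character(0)
fake_delete <- function(id) deleted_log <<- c(deleted_log, id)

# Dry run: returns the matching ids without deleting anything
preview <- purge_matching(tweets, "jar jar", fake_delete)
log_after_preview <- deleted_log

# Real run: each matching id is handed to the delete function
purge_matching(tweets, "jar jar", fake_delete, dry_run = FALSE)
```

With rtweet, the delete function would be something like function(id) post_tweet(status='', destroy_id=id), mirroring the call used in this post.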

Now there are two of them

We could stop here, but an additional optimization is to introduce parallelization, since deleting one tweet has no effect on deleting another. This means we can effortlessly parallelize the whole operation, and thereby decrease overall execution time, using the furrr package, which is basically purrr with futures. Apart from choosing a plan, replacing purrr::map() with furrr::future_map() is the only change needed.

library(furrr)

plan(multisession) # enable multiprocessing; plan(multiprocess) is deprecated in recent versions of the future package

to_purge %>%

future_map(~ post_tweet(status='', destroy_id=.x))

Voila. Now you can finally sleep easy at night, knowing that no one will ever discover your wrong Star Wars opinion.
